Some time ago, in IO Blazing Datastore Performance with Fusion-io, I wrote about the performance I was able to achieve with a single VM connected to a single VMFS volume and using thin provisioned VMDKs. Since then a new version of ESXi 5.0 has been released (U2) and some new drivers and firmware have come out for Fusion-io. I was provided an additional Fusion-io ioDrive1 card to go alongside the ioDrive2 (both MLC based), and Micron sent me one of their PCIe cards to test (SLC based). So I thought I’d reset the baseline benchmarks with a single VM utilizing all of the hardware I’ve got and see what performance I could get. I was suitably impressed with max IOPS > 200K and max throughput > 3GB/s in different tests (see graphs below). This baseline will feed into further testing of ioTurbine for Oracle and MS SQL Server, which I will write about once it’s done.

Firstly thanks to Bruce Clarke, Simon Williams and Sergey Omelaenko from Fusion-io for arranging the Fusion-io cards (ioDrive1 640GB and ioDrive2 1.2TB) and to Jared Hulbert from Micron for arranging the Micron 320h card (SLC version) and Dave Edwards at Micron for the experimental drivers and setup advice.

Disclosure: Although Fusion-io provided the two ioDrive cards and Micron provided a 320h SLC card on loan for me to test, they are not paying for this article and there is absolutely no commercial relationship between us at this time. The opinions in this article are solely mine and based on the results of the tests I conducted. Because the Fusion-io ioDrive cards are MLC based and the Micron card is SLC based, the testing could be considered an apples to oranges comparison. SLC performance is generally far higher than MLC, but at a considerably higher price.

Test Environment Configuration

As with the previous testing I used two of the Dell T710 Westmere X5650 (2 x 6 Core @ 2.66GHz) based systems from My Lab Environment, one with 1 x ioDrive2 1.2TB and 1 x ioDrive1 640GB Fusion-io cards installed and the other with the Micron 320h. Both systems have the cards installed in x8 Gen2 PCIe slots. I configured each card as a standard VMFS5 datastore via vCenter with Storage IO Control disabled. I installed the Fusion-io ioMemory VSL driver on the Fusion-io host, and the latest experimental driver I was provided on the host with the Micron card. The hosts are running the latest patch version of ESXi 5.0 U2 (build 914586) and vCenter is version 5.0 U2. Drivers that allow the Fusion-io and Micron cards to be used as a datastore are also available for vSphere 5.1, and I will retest at a later date.

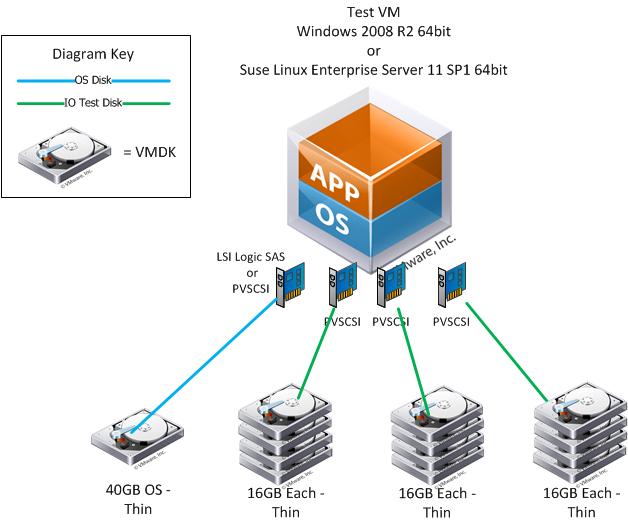

I used a single Windows 2008 R2 64bit VM and a single SUSE Linux Enterprise Server 11 SP1 VM for the tests. Only one VM at a time was active on the datastore. Each VM was configured with 8GB RAM, 6 vCPUs, and 4 SCSI controllers, one for the OS VMDK and the others for the IO Test VMDKs, as depicted in the diagram below. The Windows 2008 R2 VM used LSI Logic SAS for its OS disk and PVSCSI for the IO Test disks, whereas the SLES VM used PVSCSI for all disks. IO load was only placed on the IO Test disks during my testing. The 2 x Fusion-io ioDrive cards were tested by splitting the VMDKs evenly over both cards to get the combined performance (second image below).

Micron Test VM Configuration:

2 x Fusion-io Test VM Configuration:

Notice in the diagram above that I’m using Thin Provisioning for all of the VMDKs, and that all of this is going through a single VMFS5 datastore for the Micron, or two VMFS datastores in the case of the Fusion-io tests. So if anyone tries to tell you there is a performance issue with Thin Provisioning or VMFS datastores you can point them to this example.

As with the previous tests, I generated the IO load with IOBlazer, the VMware fling from VMware Labs. It was very easy to deploy and use and offers a full range of the normal IO testing capabilities you expect, with the additional advantage of being able to replay IO patterns recorded using vscsiStats. In my testing I used standard random and sequential read and write IO patterns of varying sizes. In each test I used 12 worker threads (one for each disk). With the exception of the 1M IO size test, where I used 1 outstanding IO, I used 8 outstanding IOs per worker thread. I did this to limit the amount of IO queueing in the vSphere kernel due to the very small queue depth on the Fusion-io card (32 OIOs), which wasn’t a problem on the Micron (255 OIOs). As a result queueing in the kernel was kept to a minimum to ensure the best possible latency results. For the Micron tests I used a 4K alignment offset to get the best performance, as performance wasn’t as good with 512B alignment. This wasn’t a problem with the Fusion-io cards.
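To put the outstanding IO settings in context, here is a rough back-of-the-envelope sketch (not part of the original test harness). It assumes the worst case where every worker keeps its full quota of IOs in flight, that the IO Test disks are spread evenly across the devices, and that each card exposes a single queue of the stated depth. The function name `kernel_queued` is made up for illustration.

```python
import math


def kernel_queued(workers: int, oio_per_worker: int,
                  devices: int, device_queue_depth: int) -> int:
    """Worst-case number of IOs per device that cannot fit in the device
    queue and would therefore wait in the hypervisor kernel.

    Assumes an even spread of workers over devices (an assumption)."""
    total_outstanding = workers * oio_per_worker
    per_device = math.ceil(total_outstanding / devices)
    return max(0, per_device - device_queue_depth)


# 12 workers x 8 OIOs over two Fusion-io cards with a queue depth of 32 each.
print("Fusion-io, 8 OIO:", kernel_queued(12, 8, 2, 32))

# Same load on the single Micron card with a queue depth of 255.
print("Micron, 8 OIO:", kernel_queued(12, 8, 1, 255))

# The 1M IO size test used 1 outstanding IO per worker.
print("Fusion-io, 1 OIO:", kernel_queued(12, 1, 2, 32))
```

Under these assumptions the deeper queue absorbs the whole load, while the 32-deep queues can only overflow by a small amount, which is why raising the outstanding IOs per worker any further would mostly add kernel queueing on the Fusion-io cards.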

Disclaimer

As with the previous tests, the IO patterns I tested are not application realistic and do not demonstrate the mix of IO patterns that you would normally expect to see in a real production environment with multiple VMs of different types sharing a datastore. The tests are for the purpose of demonstrating the limits of the different PCIe Flash cards when used at peak performance with four different fixed IO patterns. I tested 100% read 100% random, 0% read 100% random, 100% read 0% random, and 0% read 0% random. In real production workloads (shared datastore, different VM types) you would not normally see this type of IO pattern, but rather a wide variety of randomness, reads and writes, and different IO sizes. The exception is where one VM has multiple datastores or devices connected to it (big Oracle or SQL databases), in which case some of these patterns may be realistic. So consider the results to be at the top end of the scale; your mileage may vary. Due to the technical differences between the Fusion-io based MLC cards and the Micron based SLC card this isn’t an apples for apples comparison. The tests were limited to short durations (60 seconds), so there was no opportunity to determine how the different cards perform with regard to endurance. Also, not all of the cards’ capacity was used or overwritten during the tests.

IO Blazing Single VM Storage Performance

Random Read IOPS and Latency

Random Read Throughput

Random Write IOPS and Latency

Random Write Throughput

Sequential Read IOPS and Latency

Sequential Read Throughput

Sequential Write IOPS and Latency

Sequential Write Throughput

Conclusion

Given the differences in technology between the Fusion-io and Micron PCIe Flash cards this isn’t a valid head to head comparison; it is very much apples to oranges. But what the testing shows is good performance from a single VM. It also shows the capabilities of the vSphere 5.0 hypervisor, especially given the high throughput and low latencies that were achieved. These tests show that vSphere is not an inhibitor to good performance when combined with good hardware.

In all of the tests, with the exception of random write performance, the single Micron 320h SLC exceeded the performance of the combined Fusion-io ioDrive1 and ioDrive2 cards from the single VM. This demonstrates the performance difference between MLC and SLC more than anything else, and great performance from the hypervisor. Latency was consistently below 1ms for both Fusion-io and Micron up to 16KB to 32KB IO size, at which point throughput saturation was reached. Maximum IOPS of over 200K for the single VM on the Micron card is impressive, especially at < 1ms latency, as is the maximum read throughput of over 3GB/s. The Fusion-io cards performed very well during random write tests, and read throughput of 2GB/s is very good. Fusion-io’s read and write IOPS > 100K is also very good.
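As a sanity check on how IOPS, latency and throughput relate, here is a minimal sketch using Little's law (mean outstanding IOs = IOPS x mean latency). It assumes steady state and that all 12 x 8 = 96 IOs from the test setup stay continuously in flight. The 4 KB IO size in the throughput line is a hypothetical input chosen only to illustrate the conversion, not a measured point from the graphs.

```python
def mean_latency_ms(outstanding_ios: int, iops: float) -> float:
    """Little's law rearranged: latency = outstanding IOs / completion rate."""
    return outstanding_ios / iops * 1000.0


def throughput_mb_per_s(iops: float, io_size_bytes: int) -> float:
    """Throughput in decimal MB/s for a fixed IO size."""
    return iops * io_size_bytes / 1_000_000


# 12 workers x 8 outstanding IOs each, as in the test setup.
outstanding = 12 * 8

# Implied mean latency at the 200K IOPS headline figure.
print(f"{mean_latency_ms(outstanding, 200_000):.2f} ms")

# Hypothetical 4 KB IO size (an assumption for illustration only).
print(f"{throughput_mb_per_s(200_000, 4096):.1f} MB/s")
```

With 96 IOs in flight, 200K IOPS implies a mean latency of roughly half a millisecond, which lines up with the sub-1ms latencies reported at the smaller IO sizes.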

The only queue depth option on the Fusion-io cards was 32, whereas on the Micron card the queue depth was 255. The difference in queue depth makes a big difference to performance and latency as IO workloads increase. With a larger queue depth the IOs are not queued up in the kernel and are sent straight to the driver. This assumes the devices can handle the additional IOs, which PCIe Flash devices generally can. Don’t try adjusting the queue depth with your SAN arrays without very careful consideration, as it can have a negative impact.

Unfortunately the Micron card doesn’t seem to work well at all with the 512B IO size sequential write IO pattern, though this would be fairly rare in a real production environment where most workload patterns would be random and use larger IO sizes. During testing I also found that write performance suffers greatly on the Micron card when not using 4K aligned writes, as is the case with some other SSDs. This makes it very important to ensure proper IO alignment at the VMFS and Guest OS layers. This is not a problem that the Fusion-io cards suffer from, as they handle 512B natively.
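Checking alignment is simple arithmetic: an offset is 4K aligned when it is an exact multiple of 4096 bytes. The sketch below is illustrative only and the helper names are made up. The two example offsets are the classic legacy partition start at sector 63 and the common 1 MiB start at sector 2048, both with 512B sectors; they are not values taken from these tests.

```python
def is_aligned(offset_bytes: int, alignment: int = 4096) -> bool:
    """True when the byte offset is an exact multiple of the alignment."""
    return offset_bytes % alignment == 0


def align_up(offset_bytes: int, alignment: int = 4096) -> int:
    """Round a byte offset up to the next multiple of the alignment."""
    return -(-offset_bytes // alignment) * alignment


SECTOR = 512  # bytes per sector assumed for the examples below

legacy_start = 63 * SECTOR    # legacy partition start offset
modern_start = 2048 * SECTOR  # 1 MiB partition start offset

print(legacy_start, is_aligned(legacy_start), align_up(legacy_start))
print(modern_start, is_aligned(modern_start), align_up(modern_start))
```

A guest partition that starts on the legacy offset would push every IO off the 4K boundary even if the datastore itself is aligned, which is why both layers need checking.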

Raw throughput and IOPS aren’t the only considerations when it comes to PCIe Flash devices. The ability to use the local PCIe Flash device as near-line memory or cache, as in the case of Fusion-io with ioTurbine, is a major advantage for business critical applications (such as Oracle and MS SQL databases) and can increase consolidation ratios and therefore ROI without sacrificing performance. Also, when the local PCIe flash is used as cache, a device failure won’t cause any data loss because writes are committed to the SAN or primary storage, so the only impact is reduced performance.

For some workloads that would be fine on a standalone VMFS datastore, such as floating pool VDI workloads, both Fusion-io and Micron are credible choices. Both would also perform well when configured to swap to host cache. The Micron card clearly has an advantage in terms of raw throughput, especially with read performance, due to it using SLC technology. One drawback the PCIe flash card models share is that they can’t be configured for RAID in hardware or in vSphere itself. So if you’re going to be using them as a VMFS datastore and storing data on them permanently, you have a risk of data loss if the card fails. You should consider application level data protection if using the cards as a datastore, or use them for high performance data where the impact of data loss is low.

In summary, these tests show us that the VMware vSphere hypervisor is not a bottleneck to storage performance, that VMFS is not a bottleneck to storage performance, and that you can get stellar performance even when using thin provisioned VMDKs. Considering that none of the cards tested support VAAI, the performance is excellent. If you need more performance just add more PCIe flash cards. During the tests the CPU utilization on the VMs didn’t exceed 80% of the 6 vCPUs, so it’s clear the VM could have sustained higher throughput if the physical hardware could have supported it, and I could always have added more vCPUs. VMware vSphere definitely is a great platform to run your business critical and high performance applications. I hope you found this interesting and useful, and as always I would welcome any feedback.

—

This post first appeared on the Long White Virtual Clouds blog at longwhiteclouds.com, by Michael Webster. Copyright © 2013 – IT Solutions 2000 Ltd and Michael Webster. All rights reserved. Not to be reproduced for commercial purposes without written permission.

First off, kudos on such a detailed review of these products and their performance. I've yet to use any local PCIe card in a design, mostly due to using blade technology quite frequently, and am curious if you have or plan to design around these cards in the future?

Hi Chris, thanks, and yes, I do plan to use these in a design. I have a design for some business critical apps coming up and either these or local SSDs on a RAID card are options being considered (local SSDs much more expensive). The VMs that need the performance are protected from data loss at the application level, so they are good candidates for this type of technology. Oracle (or MS SQL) databases are also good candidates for the ioTurbine caching technology. You might be interested to know that most of the blade vendors have OEM agreements with Fusion-io and you can get the 'accelerator' cards, as they're called, installed in a mezzanine slot in the blades. So these can be used both for VDI and for business critical apps under the right circumstances, even in blades.