All Flash storage arrays are popular at the moment. They are winning big business away from the traditional mission critical Fibre Channel arrays and making the traditional three tier architecture a little simpler. They offer great performance for scale up workloads, where a small number of VMs need lots of performance, and they offer capacity savings by using data reduction techniques. The good all flash systems also deliver consistent, low latency, even under high load and near full capacity. However, a little simpler than traditional three tier still isn't that simple (considering the FC management overheads), and it doesn't yet make the infrastructure invisible. There are still quite a few limits. So what do you do if you want all flash with unlimited scale out, supporting thousands of VMs or a small number of high performance VMs, but with much simpler management than traditional three tier? In effect, how do you make the infrastructure invisible?

The answer is pretty simple, just like the infrastructure will be: Nutanix. Nutanix has had an all flash solution for about a year now, and it has been popular with customers who want consistently high performance and predictable, low latency for their top tier workloads. The NX9040 nodes combine 6 x Intel S3700 enterprise class SSDs with 2 x E5 v2 sockets and up to 512GB RAM. With this solution you get 2 nodes in 2 rack units of space (allowing up to 364TB of raw flash storage per rack). This is a great platform for Oracle Databases, SQL Server, SAP, Temenos T24 Core Banking etc., and we have customers running all of these sorts of workloads, especially when combined with the Nutanix Data Reduction and Data Avoidance techniques, which give you more usable capacity from the same physical storage. Josh Odgers (NPX#001) recently wrote about one of these new techniques, called EC-X.

Nutanix Data Reduction

Data Reduction includes techniques such as compression, data deduplication and EC-X. Data Avoidance includes techniques such as VAAI integration, thin provisioning, and smart clones and snapshots, where data is never created or duplicated unnecessarily in the first place. For databases and business critical apps, compression is recommended; dedupe is less likely to be effective because database data is largely unique. With these techniques it is possible to get more usable capacity than there is raw space available, but the mileage will vary based on workload. Ratios of 2:1, 3:1 or higher are possible using just one technique, and much higher ratios are possible if the workload suits the various techniques.
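
To illustrate why dedupe pays off for cloned or repeated data but not for databases full of unique rows, here is a minimal sketch of fixed-size chunk deduplication. The 4 KiB chunk size and the use of SHA-1 fingerprints are assumptions for illustration only; this is not how the Nutanix implementation is structured.

```python
import hashlib

CHUNK_SIZE = 4096  # assumed fixed chunk size, for illustration only


def dedupe_ratio(data: bytes, chunk_size: int = CHUNK_SIZE) -> float:
    """Return logical size / stored size when identical chunks are stored once."""
    store = {}  # fingerprint -> chunk: one physical copy per unique chunk
    for offset in range(0, len(data), chunk_size):
        chunk = data[offset:offset + chunk_size]
        fingerprint = hashlib.sha1(chunk).hexdigest()
        store.setdefault(fingerprint, chunk)  # keep only the first copy
    stored = sum(len(c) for c in store.values())
    return len(data) / stored if stored else 1.0


# A 16 KiB "image" made of four distinct chunks, deployed ten times over.
os_image = b"".join(bytes([i]) * CHUNK_SIZE for i in range(4))
print(f"{dedupe_ratio(os_image * 10):.1f}:1")  # 10.0:1

# Database-like data where every chunk differs: nothing to deduplicate.
unique_rows = b"".join(i.to_bytes(4, "big") * 1024 for i in range(40))
print(f"{dedupe_ratio(unique_rows):.1f}:1")  # 1.0:1
```

The same logic is why compression, which works within each block, is the better fit for databases.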

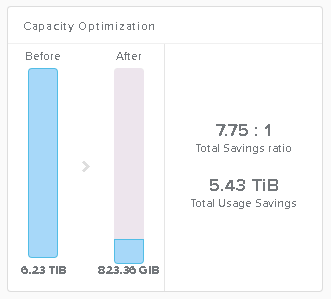

Where this doesn't work so well is with a database that uses Transparent Data Encryption (TDE) in the case of SQL Server, or Advanced Security Encryption in the case of Oracle. Encrypted data is effectively random, which makes it difficult to reduce its capacity. Some platforms, including the NX9040, support data encryption at rest, which may reduce the need to encrypt within the applications themselves. The accompanying image shows the data reduction measured in the Nutanix PRISM user interface for an Oracle RAC database running on an NX9040 cluster. This gives an example of what may be achieved, depending on the workload of course. Your mileage may vary.
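
A quick way to see the effect of randomness is to compress structured data and random bytes of the same size. This sketch uses Python's zlib purely as a stand-in compressor (an assumption; it is not the algorithm Nutanix uses), and random bytes stand in for well-encrypted data.

```python
import os
import zlib


def compression_ratio(data: bytes) -> float:
    """Logical size divided by compressed size (higher is better)."""
    return len(data) / len(zlib.compress(data, 6))


# Repetitive, structured content, a stand-in for unencrypted table rows.
plain = b"customer_id,branch,balance\n" + b"10042,AUCKLAND,1500.00\n" * 4000
# Random bytes behave like encrypted data: no patterns left to exploit.
encrypted_like = os.urandom(len(plain))

print(f"plain:     {compression_ratio(plain):.1f}:1")
print(f"encrypted: {compression_ratio(encrypted_like):.2f}:1")
```

The random input typically compresses to slightly more than its original size, which is why encrypting inside the application largely defeats storage-level data reduction.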

From the above image you can see that the database would consume 5.57TiB of capacity before data reduction. The database itself is approximately 3TB in size; the overhead is due to data protection and data integrity measures. After data reduction the raw capacity used is only 1.95TiB. These savings are the result of compression alone, and combining compression with EC-X could achieve even higher levels of reduction. The larger the Nutanix cluster grows, the more efficient the data reduction techniques become.
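
The arithmetic behind those figures is shown below, followed by a rough comparison of raw capacity per unit of usable data for two full copies (RF2) versus erasure-coded stripes with one parity block. The stripe widths are hypothetical; actual EC-X stripe sizes depend on the cluster size and configuration, so treat them as illustrative only.

```python
def reduction_ratio(before_tib: float, after_tib: float) -> float:
    """Capacity before reduction divided by capacity after reduction."""
    return before_tib / after_tib


def raw_per_usable(data_blocks: int, redundancy_blocks: int) -> float:
    """Raw capacity consumed per unit of usable data for a stripe."""
    return (data_blocks + redundancy_blocks) / data_blocks


# Figures quoted above for the Oracle RAC database (compression only).
before, after = 5.57, 1.95
print(f"ratio: {reduction_ratio(before, after):.2f}:1")  # 2.86:1
print(f"saved: {before - after:.2f} TiB")                # 3.62 TiB

# RF2 keeps a full second copy of every block: 2.00x raw per usable.
print(f"RF2:        {raw_per_usable(1, 1):.2f}x")
# Hypothetical erasure-coded stripes: wider stripes need more nodes,
# which is why larger clusters can use capacity more efficiently.
for data_blocks in (2, 3, 4):
    print(f"{data_blocks}/1 stripe: {raw_per_usable(data_blocks, 1):.2f}x")
```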

Here is another example of using compression, this time with Exchange JetStress Databases (Caution: JetStress is not a real world workload and the data reduction ratios may be higher than you would expect for real Exchange environments).

Most environments have test and development workloads alongside production. In the Nutanix environment, creating a snapshot or clone of a workload does not increase storage consumption, because no data has changed. This works just the same with ESXi and the VMware vStorage APIs for Array Integration (VAAI) when deploying from templates. You can deploy as many new VMs this way as you like, and storage consumption doesn't increase until new data is written.
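
The idea of sharing unchanged data between a source and its clones can be sketched with a toy redirect-on-write model. The Store and VDisk classes are hypothetical and far simpler than the Distributed Storage Fabric; for example, nothing here reclaims blocks that are no longer referenced.

```python
from dataclasses import dataclass, field


@dataclass
class Store:
    """Toy physical store: blocks are shared until something writes."""
    blocks: dict = field(default_factory=dict)  # block_id -> bytes
    next_id: int = 0

    def put(self, data: bytes) -> int:
        self.next_id += 1
        self.blocks[self.next_id] = data
        return self.next_id

    def used(self) -> int:
        return sum(len(b) for b in self.blocks.values())


@dataclass
class VDisk:
    store: Store
    block_map: dict = field(default_factory=dict)  # logical offset -> block_id

    def write(self, offset: int, data: bytes) -> None:
        # New data always goes to a new physical block (redirect-on-write),
        # so blocks shared with clones or snapshots are never overwritten.
        self.block_map[offset] = self.store.put(data)

    def clone(self) -> "VDisk":
        # A clone copies only metadata (the block map), not the data itself.
        return VDisk(self.store, dict(self.block_map))


store = Store()
prod = VDisk(store)
for off in range(100):
    prod.write(off, b"x" * 4096)
before = store.used()

test_copies = [prod.clone() for _ in range(5)]
print(store.used() - before)  # 0: five clones, no extra capacity

test_copies[0].write(0, b"y" * 4096)
print(store.used() - before)  # 4096: only the changed block consumes space
```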

By using these techniques, fully valid copies of production can be used for testing and development without consuming any additional storage. You can have a higher level of confidence that code will work when it's migrated to production, and you can always get back to a known, consistent test environment state, all within seconds and without consuming more storage capacity.

You can read more about Nutanix Data Reduction and Data Avoidance techniques in the Nutanix Bible Distributed Storage Fabric section.

Nutanix All Flash Performance

As with data reduction and avoidance techniques, performance varies by workload. It is very rare for an application to have a single IO pattern, so the best way to test performance is with a real application. Real applications don't generally generate just 4KB IO sizes either, so when testing Nutanix All Flash Performance we use tools such as SLOB (the official SLOB page is http://kevinclosson.net/slob/), Swingbench, Benchmark Factory for Databases and HammerDB. The examples shown here were created using SLOB, as was the featured image.

When evaluating IO performance you can't review one metric out of context, otherwise the review is invalid. Take IOPS, for example: the number can change drastically with different IO sizes, and each IO size brings different latencies and throughput. So IOPS alone is not a valid metric; you need to combine it with IO size, latency, throughput and knowledge of the application being tested. In the tests conducted against an Oracle RAC environment using SLOB, the IO generated is lots of small reads, each 8KB due to the native database block size, and the pattern is 100% random. Transaction log IO, by contrast, uses far larger IO sizes and is sequential in nature.
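
The link between IOPS, IO size and throughput is simple multiplication, which is why quoting IOPS without an IO size says very little. The sketch below uses a hypothetical 70,000 IOPS of 8 KiB reads and shows how many IOPS the same 1 GiB/s of throughput represents at different IO sizes.

```python
KIB = 1024
MIB = 1024 * KIB


def throughput_mib_s(iops: float, io_size_bytes: int) -> float:
    """Throughput implied by an IO rate at a fixed IO size."""
    return iops * io_size_bytes / MIB


# The same 1 GiB/s means very different IOPS at different IO sizes.
for size_kib in (4, 8, 64, 1024):
    iops = (1024 * MIB) / (size_kib * KIB)
    print(f"{size_kib:>5} KiB IO: {iops:>9,.0f} IOPS for 1 GiB/s")

# Hypothetical 70,000 IOPS of 8 KiB random reads:
print(f"{throughput_mib_s(70_000, 8 * KIB):.1f} MiB/s")  # 546.9 MiB/s
```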

In the following diagrams the system under test is a two node Oracle RAC cluster (48 vCPU and 192GB RAM per node) running against a Nutanix cluster consisting of 2 x NX4170 nodes (4 x E5-4657L v2, 512GB RAM) and 4 x NX9040 nodes (2 x E5-2690 v2, 512GB RAM). Both node types use older generation Intel Ivy Bridge processors. The hypervisor used was ESXi 5.5; later tests showed better performance after the hypervisor was upgraded to ESXi 6.0.

Oracle RAC SLOB IOPS

The image above shows two tests: one with a 30% update workload (first test) and one with a 100% select workload (second test). The update workload generates approximately 70K IOPS.

Oracle RAC SLOB Latency

The image above shows the latency during the different benchmarks. The update heavy test has higher latency of around 4ms, peaking at 7ms, while the select heavy workload has latency of 0.45ms. Latency in subsequent testing was lower after the hypervisor was updated to the latest version.
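
Latency and IOPS are tied together by Little's Law, a general queueing result rather than anything specific to Nutanix: average IOs in flight equal the IO rate multiplied by the average latency. The sketch applies it to the approximate update-heavy figures (about 70K IOPS at about 4ms), treating them as steady-state averages, which is a simplification. The queue depth of 32 in the second part is hypothetical and shows why lower latency matters to an application that can only keep a fixed number of IOs outstanding.

```python
def outstanding_ios(iops: float, latency_ms: float) -> float:
    """Little's Law: average IOs in flight = IOPS x latency (seconds)."""
    return iops * latency_ms / 1000.0


def iops_at_queue_depth(queue_depth: int, latency_ms: float) -> float:
    """Little's Law rearranged: IOPS a fixed number of outstanding IOs can drive."""
    return queue_depth / (latency_ms / 1000.0)


# Approximate update-heavy figures: ~70K IOPS at ~4 ms average latency.
print(f"{outstanding_ios(70_000, 4.0):.0f} IOs in flight on average")  # 280

# Hypothetical application limited to 32 outstanding IOs:
for latency in (7.0, 4.0, 0.45):
    print(f"{latency:>5} ms -> {iops_at_queue_depth(32, latency):>8,.0f} IOPS")
```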

Oracle RAC SLOB Throughput

As you can see from the above graph, throughput was approximately 800MB/s during the update heavy test and 1.13GB/s during the select heavy workload. Remember that this is a Nutanix hyper-converged appliance: the total solution took up only 8 rack units of space for both the compute and the storage. In the near future Nutanix will once again shrink the physical space and power required to produce numbers like these, so you'll need even less space and have an even simpler environment for your all flash workloads.

Do You Need All Flash?

Whether you do or not is really up to you. Nutanix has a compelling all flash option in the NX9040, and we will be building on it in future platforms for even more extreme workloads. Every Nutanix node type includes some flash, and data is tiered between flash and hard disk at the block level depending on how frequently it is accessed. Whether all flash is required really comes down to predictability over the lifetime of the entire solution. If your applications only have hot data and you want to guarantee it is always in flash, then All Flash may be for you. The All Flash platforms have lower overheads because no data tiering is necessary, even though data tiering is efficient and perfectly acceptable for most workloads.
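
As a rough illustration of frequency-based tiering and where predictability comes in, here is a toy placement function. The access log and flash capacities are hypothetical, and the Nutanix tiering logic is considerably more sophisticated than ranking by access count.

```python
from collections import Counter


def place_blocks(access_log: list[int], flash_capacity: int) -> tuple[set[int], set[int]]:
    """Put the most frequently accessed blocks in flash and the rest on HDD."""
    counts = Counter(access_log)
    ranked = [block for block, _ in counts.most_common()]  # hottest first
    return set(ranked[:flash_capacity]), set(ranked[flash_capacity:])


# Hypothetical access log of block IDs.
log = [1, 1, 1, 2, 2, 3, 3, 3, 3, 4, 5, 1, 2]

flash, hdd = place_blocks(log, flash_capacity=3)
print(sorted(flash), sorted(hdd))  # [1, 2, 3] [4, 5]

# If the hot working set outgrows flash, a frequently used block (2)
# lands on HDD; all flash removes that variable entirely.
flash, hdd = place_blocks(log, flash_capacity=2)
print(sorted(flash), sorted(hdd))  # [1, 3] [2, 4, 5]
```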

At this point in time the best uses for all flash are databases and application servers that require a low latency service guarantee, especially where there is an application licensing incentive to virtualize more systems per physical node to save money: with more performance, you can virtualize more applications per node. Think Oracle and SQL Server.

Nutanix has recently announced a feature that allows flash pinning even on hybrid nodes. It is not yet released, but when it is, it will allow VMs or virtual disks to be pinned to the flash tier, so it is likely to offer a middle ground between the standard hybrid and all flash options. Increasingly over time, though, I predict most systems will move to predominantly flash, with hard disk nodes used only for snapshots, backup and archive.

Final Word

As the images in this article show, all flash on a hyper-converged web scale platform delivers great performance and is a viable option where simplicity of management, high performance, low latency and unlimited scale out are required. Data reduction and avoidance techniques provide a way to get much more usable storage capacity from the platform, and they work just as well on Nutanix as they do on other all flash arrays. The only place this doesn't fit is where a single extreme workload or single VM needs all of the performance of the array, and where you don't plan to, or can't, scale out. For those situations something like Pure Storage might be a good choice. For everything else, Nutanix has by far the simplest solution, offering great performance and unlimited scale.

—

This post first appeared on the Long White Virtual Clouds blog at longwhiteclouds.com. By Michael Webster +. Copyright © 2012 – 2015 – IT Solutions 2000 Ltd and Michael Webster +. All rights reserved. Not to be reproduced for commercial purposes without written permission.

Hello Michael, I enjoy seeing SLOB in use for platform testing. Can you please refer your readers to the main SLOB page though? That link would be: http://kevinclosson.net/slob/

Assume that a VM on an all flash Nutanix cluster has only a single vDisk. When this VM performs disk IO, will Nutanix stripe the data across all local disks at the same time, or will it store it on the local disks sequentially?

If it is the latter, what if the VM has multiple vDisks? And would RAID across the local disks be feasible?

Data is accessed by extents and extent groups, which are spread over multiple physical disks. No RAID is used on the local disks because it isn't necessary: data is protected by replicating it across the cluster. If a checksum failure or other event makes any single copy of data unavailable, it is repaired transparently. If a VM has multiple vDisks, IO is split across those disks, which allows for more queues in the OS, and more threads are available to work on the vDisks at the back end, which results in better performance. The Nutanix platform is designed like a public cloud, so that no single operation can monopolise system resources. For large databases, for example, multiple vDisks can help achieve the best performance. This is similar to how MS Azure and AWS work as well.
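
A simplified sketch of the idea that even a single vDisk's data lands on every local disk, with a second replica on another node instead of local RAID, is shown below. Round-robin placement and the disk and node names are assumptions made for illustration; real placement also weighs factors such as disk fullness and performance.

```python
import itertools

# Six local SSDs, as in the NX9040 configuration, plus hypothetical peer nodes.
LOCAL_DISKS = ["ssd1", "ssd2", "ssd3", "ssd4", "ssd5", "ssd6"]
REMOTE_NODES = ["node-b", "node-c", "node-d"]


def place_extent_groups(num_groups: int) -> list[tuple[str, str]]:
    """Spread one vDisk's extent groups over every local disk and keep a
    second replica of each on another node (replication, not RAID)."""
    disks = itertools.cycle(LOCAL_DISKS)
    peers = itertools.cycle(REMOTE_NODES)
    return [(next(disks), next(peers)) for _ in range(num_groups)]


placement = place_extent_groups(12)
used_local = {local for local, _ in placement}
print(len(used_local))  # 6: even a single vDisk touches all six local SSDs
```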